"it is the interpretations that are critical, rather than data itself."

His key interpretations are that an effect size of d = 0.40 is the "hinge point" for identifying what is and what is not effective, and that it is equivalent to advancing a child’s achievement by one year (VL 2012 summary p. 3).
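Here is a minimal Python sketch of the arithmetic behind these two claims. It assumes the effect size is Cohen's d computed with a pooled standard deviation. This is one common definition, and other definitions exist. It also treats "years of progress" as d divided by a typical year's growth, measured in standard deviations. The `annual_growth_d` values are hypothetical. They are chosen only to show that d = 0.40 equals one year of progress only if a typical year's growth happens to be 0.40 standard deviations.

```python
import math

HINGE = 0.40  # Hattie's "hinge point"


def cohens_d(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Standardised mean difference using the pooled standard deviation."""
    pooled_var = ((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / (n_t + n_c - 2)
    return (mean_t - mean_c) / math.sqrt(pooled_var)


def years_of_progress(d, annual_growth_d):
    """Express an effect size as 'years of progress', given how many SDs a
    typical student grows in one year (a hypothetical, age-dependent figure)."""
    return d / annual_growth_d


# Hypothetical study: treatment mean 54, control mean 50, both SD 10, n = 30 each
d = cohens_d(mean_t=54.0, mean_c=50.0, sd_t=10.0, sd_c=10.0, n_t=30, n_c=30)
print(f"d = {d:.2f}, at or above hinge: {d >= HINGE}")

# The same d read against two hypothetical rates of typical annual growth
for growth in (0.40, 0.20):
    print(f"annual growth {growth:.2f} SD -> {years_of_progress(d, growth):.1f} years")
```

With these hypothetical figures, the same d = 0.40 is one year of progress for a cohort that grows 0.40 SD per year. For a cohort that grows 0.20 SD per year, it is two years.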

There are also major issues with his methodology: he relies too heavily on correlational studies, many of the studies do not measure changes in achievement, and meta-analyses of the same influence do not consistently get the same results. As a result, averaging across such disparate results is questionable - see validity and reliability.
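A heterogeneity test is one standard way to check whether results are too disparate to average. The sketch below is a textbook fixed-effect (inverse-variance) calculation. It is not a reconstruction of Hattie's procedure. It pools four hypothetical effect sizes for the "same" influence. It then reports Cochran's Q and the I² statistic, which is the share of total variation due to differences between studies rather than sampling error.

```python
def pooled_estimate(effects, variances):
    """Fixed-effect (inverse-variance) mean, Cochran's Q and I^2."""
    weights = [1.0 / v for v in variances]
    mean = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    # Q: weighted squared deviations of each study from the pooled mean
    q = sum(w * (d - mean) ** 2 for w, d in zip(weights, effects))
    df = len(effects) - 1
    # I^2: proportion of Q in excess of what sampling error alone would give
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return mean, q, i2


# Hypothetical meta-analyses of one influence, all with the same variance
effects = [0.05, 0.90, 0.15, 0.70]
variances = [0.01, 0.01, 0.01, 0.01]
mean, q, i2 = pooled_estimate(effects, variances)
print(f"pooled d = {mean:.2f}, Q = {q:.1f}, I^2 = {i2:.0%}")
```

Here the pooled d of 0.45 clears the 0.40 hinge point, although none of the four hypothetical results is close to 0.45. An I² above 90% signals that a single average is a poor summary of them.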

In addition, there are many errors, ranging from misinterpreted studies to calculation mistakes and excessive inference.

This indicates that "teaching", like any other "people" science, is complicated and full of nuance. What works with one student, class or context may not work in another (the emerging field of Contextual Psychology also analyses this complexity). As Prof Jill Blackmore states,

"You may have a teacher in one class and they do really well and then you put them in another classroom or in a different school and they are not that crash hot. It’s all about the class …, it so complex… It should be about valuing teachers as professionals and giving them the capacity to make professional judgements" (p. 56).

Whilst it may be simpler and easier to see teaching as a set of discrete influences, the evidence shows that these influences interact in ways that no-one, as yet, can quantify. It is the combining of influences in complex ways that defines the "art" of teaching.

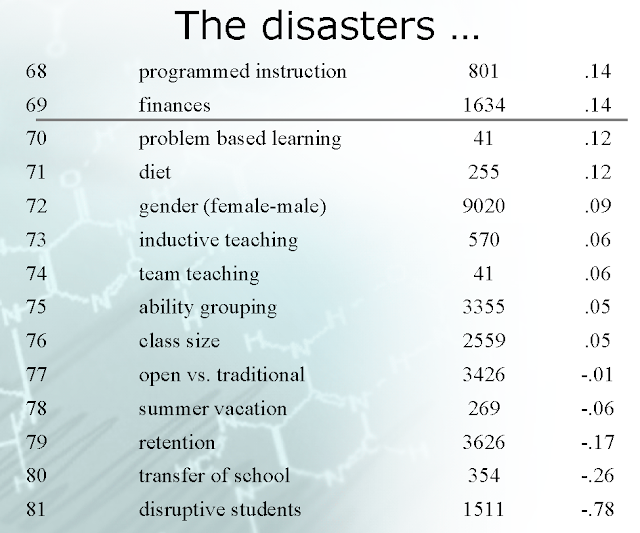

The notion that an influence is either "what works best" or a "disaster" (as Hattie presented it at the 2005 ACER conference) is dangerous and misleading, not least because of the political ramifications.

Given the definitive statements that Hattie and his supporters make, it is important to bear in mind the critique by statisticians such as Professor Arne Kare Topphol:

"My criticism of the erroneous use of statistical methods will thus probably not affect Hattie’s scientific conclusions. However, my point is, it undermines the credibility of the calculations and it supports my conclusion and the appeal I give at the end of my article; when using statistics one should be accurate, honest, thorough in quality control and not go beyond one's qualifications.

My main concern in this article is thus to call for care and thoroughness when using statistics. The credibility of educational research relies heavily on the fact that we can trust its use of statistics. In my opinion, Hattie’s book is an example that shows that we unfortunately cannot always have this trust."

The people and websites that proclaim, using Hattie's inferences, that the effects of class size, diet or ability grouping are myths need to be challenged. Have you read the studies? Are they measuring achievement? How are they doing this? Is the study a true experiment or a correlation? Are there discrepancies or confounds? What is the age of the students? What do the researchers conclude? Is this consistent with Hattie's summary?

More caution is in order, as educational guru Andy Hargreaves says in his book, The Fourth Way:

"Even with all the best evidence in the world’s top hospitals, medicine remains 'an imperfect science', an enterprise of constantly changing knowledge, uncertain information, fallible individuals" (p. 34).

"It doesn’t treat statistical evidence as gospel truth leading to unarguable commandments, but rather as a process of interaction in a professional learning community where everyone wants to improve. Players on this professional team see data as their companion - not as their commander" (p. 37).

Hattie seems to denigrate widespread teacher experience with his campaign slogan "Statements without evidence are just opinions" (borrowed from W. Edwards Deming). This seems to raise the status of his research and belittle teacher experience further. However, teacher experience can be seen as a form of action research or case study. The medical profession still relies heavily on case studies, usually one doctor with one patient under a particular set of circumstances. In fact, one of the studies that Hattie cites heavily, the extensive New Zealand government-funded study by Alton-Lee (2003), promotes case study in conjunction with other research:

"It is with the rich detail of case studies that the complexity of the learning processes and impact of effective pedagogy can be traced in context, in ways that teachers can understand implications for their practice" (p. 13).

After reading much of the research Hattie cites, I am not convinced that it is possible to separate influences in the precise, surgical way that Hattie implies. I find that Hattie's method and interpretation miss much of the complex interaction between the many influences in the classroom. In fact much of that research also

Maths Teaching:

My involvement with teaching over the last 40 years has been as a maths teacher and sports coach. Like most other teachers, I read about new methods, attend professional development and experiment with different techniques in my classes. Whilst my conclusions from that experience are anecdotal, I feel they are still more valuable than the poor research Hattie has given us. My experience differs markedly from what Hattie says are good or poor practices.

Firstly, class size DOES MATTER, and the evidence shows this. In addition, many of the strategies I use, e.g., problem-based learning, computer-assisted instruction, creativity, simulations, inductive teaching, inquiry-based teaching, visual/audio-visual methods, web-based learning, within-class grouping, student control over learning, kinesthetic awareness and teacher subject matter knowledge, work for large numbers of students, yet they fall below Hattie's "worthwhile" point of d = 0.40.

Other initiatives that contradict Hattie's interpretations include the Maths 300 project, the Maths Task Centre and the work of Prof James Tanton. Boaler's work on visualisation does so too.

We also need to spend more time promoting and congratulating good teaching, as maths guru Prof Steven Strogatz did in his tweet about an Australian maths teacher.

Is Hattie’s Evidence Stronger than That of Other Researchers or Widespread Teacher Experience?

A summary of the major issues scholars have found with Hattie's work (details on the page links on the right):

- Hattie misrepresents studies, e.g., studies of peer evaluation are included in "self-report", and studies of emotionally disturbed students are included in "reducing disruptive behavior".

- Hattie often reports the opposite conclusion to that of the actual authors of the studies he reports on, e.g. "class-size", "teacher training", "diet" and "reducing disruptive behavior".

- Hattie jumbled together and averaged the effect sizes of different measures of student achievement, e.g., teacher tests, IQ, standardised tests and physical tests such as rallying a tennis ball against a wall.

- Hattie jumbled together and averaged effect sizes from studies that do not measure achievement but something else, e.g., hyperactivity in the Diet study. That is, he uses these as proxies for achievement, which he advised us NOT to do in his 2005 ACER presentation.

- The studies are mostly about non-school or abnormal populations, e.g., doctors, nurses, university students, tradesmen, pre-school children, and "emotionally/behaviorally" disturbed students.

- The US Education Department's benchmark effect sizes per year level indicate another layer of complexity in interpreting effect sizes: studies need to control for the age of the students as well as the time over which the study runs. Hattie does not do this.

- Related to the US benchmarks is Hattie's use of d = 0.40 as the hinge point for judging what is a "good" or "bad" influence. The US benchmarks show this is misleading.

- Most of the studies Hattie uses are not high-quality randomised controlled trials but much, much poorer-quality correlational studies.

- Most scholars are cautious or doubtful about attributing causation to separate influences in the precise, surgical way that Hattie implies. This is because of the unknown effect of outside influences, or confounds.

- Hattie makes a number of major calculation errors, e.g., negative probabilities.
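The negative probabilities appear in the common language effect size (CLE) that Visible Learning reports alongside d. The CLE is the probability that a randomly chosen student from the treatment group outscores a randomly chosen student from the control group. As a probability, it must lie between 0 and 1. Below is a minimal sketch using Python's standard library. It assumes two normal distributions with equal variance, in which case CLE = Φ(d/√2), where Φ is the standard normal cumulative distribution function.

```python
from statistics import NormalDist


def common_language_effect_size(d):
    """Probability that a random treated student outscores a random control
    student, assuming two equal-variance normal distributions:
    CLE = Phi(d / sqrt(2))."""
    return NormalDist().cdf(d / 2 ** 0.5)


# Even a negative effect size gives a probability between 0 and 0.5, never below 0
for d in (-0.50, 0.0, 0.40, 1.00):
    print(f"d = {d:+.2f} -> CLE = {common_language_effect_size(d):.2f}")
```

Under this assumption, d = -0.50 gives a CLE of roughly 0.36 and d = 0.40 gives roughly 0.61. A negative CLE signals a calculation error rather than a harmful influence.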
